every blog every motto: You can do more than you think.

https://blog.csdn.net/weixin_39190382?type=blog

0. Preface

U-KAN is here, and it arrived remarkably fast: KAN itself only came out last month.

This post is a placeholder for now; I'll read the paper in detail when I have time.

The abstract is included below.

1. Main Text

Here is the abstract:

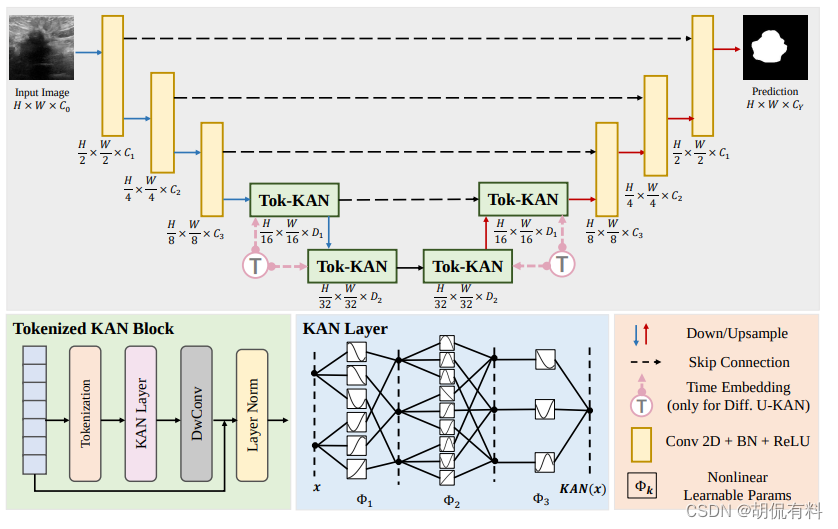

U-Net has become a cornerstone in various visual applications such as image segmentation and diffusion probability models. While numerous innovative designs and improvements have been introduced by incorporating transformers or MLPs, the networks are still limited to linearly modeling patterns and suffer from deficient interpretability. To address these challenges, our intuition is inspired by the impressive results of the Kolmogorov-Arnold Networks (KANs) in terms of accuracy and interpretability, which reshape neural network learning via a stack of non-linear learnable activation functions derived from the Kolmogorov-Arnold representation theorem. Specifically, in this paper, we explore the untapped potential of KANs in improving backbones for vision tasks. We investigate, modify and re-design the established U-Net pipeline by integrating dedicated KAN layers on the tokenized intermediate representation, termed U-KAN. Rigorous medical image segmentation benchmarks verify the superiority of U-KAN by higher accuracy even with less computation cost. We further delved into the potential of U-KAN as an alternative U-Net noise predictor in diffusion models, demonstrating its applicability in generating task-oriented model architectures. These endeavours unveil valuable insights and shed light on the prospect that with U-KAN, you can make a strong backbone for medical image segmentation and generation.
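To make "a stack of non-linear learnable activation functions" a little more concrete, here is a toy, pure-Python sketch of a single KAN layer. It follows the general KAN idea: every input-to-output edge carries its own learnable univariate function, and each output is simply the sum of those functions applied to the inputs, i.e. x_{l+1,j} = Σ_i φ_{l,j,i}(x_{l,i}). It is deliberately simplified and is an assumption-laden sketch, not U-KAN's code: it uses degree-1 (hat) B-spline bases on a fixed uniform grid instead of the higher-order B-splines typically used by KAN, adds a weighted SiLU base branch, and has no training loop, grid update, or tokenization. The names `ToyKANLayer`, `hat_basis`, `grid_size`, and `grid_range` are hypothetical and chosen only for illustration.

```python
import math
import random


def silu(x: float) -> float:
    """SiLU base activation x * sigmoid(x), written to avoid exp overflow."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)


def hat_basis(x: float, grid: list) -> list:
    """Degree-1 B-spline (hat) basis values at x on a uniform grid.

    One hat of half-width h (the grid spacing) is centred on each grid point.
    Between the first and last grid points the values sum to 1; beyond that
    range they fall to 0 within one grid step.
    """
    h = grid[1] - grid[0]
    return [max(0.0, 1.0 - abs(x - g) / h) for g in grid]


class ToyKANLayer:
    """Hypothetical toy KAN layer mapping in_dim inputs to out_dim outputs.

    Edge (j, i) has its own learnable univariate function
        phi_ji(x) = w_base[j][i] * silu(x) + sum_k coef[j][i][k] * B_k(x)
    and output j is sum_i phi_ji(x_i).
    """

    def __init__(self, in_dim, out_dim, grid_size=5, grid_range=(-1.0, 1.0), seed=0):
        if grid_size < 2:
            raise ValueError("grid_size must be at least 2")
        rng = random.Random(seed)
        lo, hi = grid_range
        step = (hi - lo) / (grid_size - 1)
        self.grid = [lo + k * step for k in range(grid_size)]
        self.in_dim, self.out_dim = in_dim, out_dim
        # Learnable parameters, randomly initialised here (training is out of scope).
        self.w_base = [[rng.uniform(-0.5, 0.5) for _ in range(in_dim)]
                       for _ in range(out_dim)]
        self.coef = [[[rng.gauss(0.0, 0.1) for _ in self.grid] for _ in range(in_dim)]
                     for _ in range(out_dim)]

    def edge(self, j, i, x):
        """Evaluate the learnable activation on the edge from input i to output j."""
        spline = sum(c * b for c, b in zip(self.coef[j][i], hat_basis(x, self.grid)))
        return self.w_base[j][i] * silu(x) + spline

    def forward(self, xs):
        if len(xs) != self.in_dim:
            raise ValueError(f"expected {self.in_dim} inputs, got {len(xs)}")
        # Each output sums its per-edge activations; there is no weight matrix
        # followed by a shared fixed activation as in an MLP layer.
        return [sum(self.edge(j, i, x) for i, x in enumerate(xs))
                for j in range(self.out_dim)]


if __name__ == "__main__":
    # Stack two layers, since a KAN is a stack of such layers.
    net = [ToyKANLayer(3, 4, seed=0), ToyKANLayer(4, 2, seed=1)]
    h = [0.1, -0.4, 0.7]
    for layer in net:
        h = layer.forward(h)
    print(h)  # two floats; exact values depend on the random initialisation
```

The contrast with an MLP is the point of the sketch: an MLP learns a weight matrix and applies one fixed non-linearity, while here the non-linearity on every edge is itself parameterized (through `coef` and `w_base`) and would be what training adjusts.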