Title

Automatic motion artefact detection in brain T1-weighted magnetic resonance images from a clinical data warehouse using synthetic data

Background

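In recent years, hospitals have set up clinical data warehouses (CDWs) that collect medical images from large numbers of patients. The quality of these images varies widely, and magnetic resonance images (MRIs) are especially prone to artefacts caused by patient movement. Because MRI acquisition takes a long time, patients may move during the examination, which produces artefacts such as blurring, ringing and ghosting in the reconstructed image and seriously compromises subsequent neuroimaging analyses. Conventional automatic quality-control tools rely on neuroimaging software packages that only work on high-quality images, so they are not suited to CDWs. Manually annotating motion artefacts is also very difficult, which motivates simulating motion artefacts as a way to generate reliable labelled data automatically. To bridge the gap between synthetic data and real clinical images, transfer learning is used: a model is pre-trained on research data and then fine-tuned, so that the motion-detection knowledge learned from research data can be applied to clinical data. We are the first to use motion simulation and research data to address the detection of motion artefacts in routine clinical data.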

Abstract

Containing the medical data of millions of patients, clinical data warehouses (CDWs) represent a great opportunity to develop computational tools. Magnetic resonance images (MRIs) are particularly sensitive to patient movements during image acquisition, which result in artefacts (blurring, ghosting and ringing) in the reconstructed image. As a result, a significant number of MRIs in CDWs are corrupted by these artefacts and may be unusable. Since manual detection is impossible given the large number of scans, tools are needed to automatically exclude (or at least identify) images with motion in order to fully exploit CDWs. In this paper, we propose a novel transfer learning method from research to clinical data for the automatic detection of motion in 3D T1-weighted brain MRI. The method consists of two steps: pre-training on research data using synthetic motion, followed by fine-tuning to generalise the pre-trained model to clinical data, relying on the labelling of 4045 images. The objectives were (1) to exclude images with severe motion and (2) to detect mild motion artefacts. Our approach achieved excellent accuracy for the first objective, with a balanced accuracy close to that of the annotators (balanced accuracy > 80%). However, for the second objective, performance was weaker and substantially lower than that of human raters. Overall, our framework will be useful for taking advantage of CDWs in medical imaging, and it highlights the importance of clinically validating models trained on research data.

Method

We developed an approach based on the generation of synthetic motion to improve the detection of motion artefacts in clinical T1w brain MR images. We used T1w images acquired with scanners from different manufacturers and at different magnetic field strengths, drawn both from publicly available research data sources and from a CDW. Motion artefacts were generated synthetically with both image-based and k-space-based approaches using rigid-body transformations, to simulate artefacts of different severity. CNNs were first trained on research databases to recognise synthetic motion, and their performance was evaluated on real motion. We then generalised our model to the CDW by applying an efficient transfer learning technique.
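
The k-space-based simulation can be illustrated with a minimal 2D sketch. It is not the authors' implementation and rests on several assumptions. It works on a single slice rather than a 3D volume. It assumes 1 mm pixels, so millimetres equal pixels, and treats one k-space row as one phase-encoding line. It also uses translations only: by the Fourier shift theorem a translation is a phase ramp in k-space, so the sampling grid stays uniform and a plain inverse FFT suffices, whereas rotations move samples off-grid (hence the NUFFT in Fig. 1). The names `kspace_motion` and `step_motion`, and the single-jump motion time course, are hypothetical.

```python
import numpy as np


def kspace_motion(image: np.ndarray, shifts: np.ndarray) -> np.ndarray:
    """Corrupt a 2D slice with rigid translations applied line by line in k-space.

    shifts[i] = (dy, dx), in pixels, is the head position while k-space row i
    (assumed to be one phase-encoding line) is acquired.
    """
    ny, nx = image.shape
    kspace = np.fft.fft2(image)
    ky = np.fft.fftfreq(ny)[:, None]  # (ny, 1) spatial frequencies, cycles/pixel
    kx = np.fft.fftfreq(nx)[None, :]  # (1, nx)
    # Fourier shift theorem: img(y - dy, x - dx) <-> K(ky, kx) * exp(-2j*pi*(ky*dy + kx*dx)).
    # Each row gets the phase ramp of the position held when that row was sampled.
    phase = np.exp(-2j * np.pi * (ky * shifts[:, :1] + kx * shifts[:, 1:]))
    # Translations keep samples on the Cartesian grid, so a plain inverse FFT suffices.
    return np.abs(np.fft.ifft2(kspace * phase))


def step_motion(ny: int, lo: float, hi: float, rng: np.random.Generator) -> np.ndarray:
    """Hypothetical time course: one sudden jump part-way through the acquisition.

    Each shift component has a magnitude drawn from [lo, hi] and a random sign,
    mirroring ranges such as [2 mm, 8 mm] under the 1 mm/pixel assumption.
    """
    shifts = np.zeros((ny, 2))
    t = int(rng.integers(ny // 4, 3 * ny // 4))  # row at which the patient moves
    shifts[t:] = rng.uniform(lo, hi, size=2) * rng.choice([-1.0, 1.0], size=2)
    return shifts


rng = np.random.default_rng(0)
slice_ = rng.random((64, 64))  # stand-in for a motion-free MR slice
corrupted = kspace_motion(slice_, step_motion(64, 2.0, 8.0, rng))
```

If every row gets the same shift, the result is just a circularly shifted copy of the slice. A jump part-way through the acquisition mixes two head positions in one k-space, which is what produces ghosting- and ringing-like artefacts after reconstruction.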

Conclusion

In this study, we proposed a transfer learning framework from research to clinical data for the automatic detection of motion artefacts in 3D T1w brain MRI, which was validated on a large clinical data warehouse. We trained and validated different CNNs on a research dataset comprising images from three publicly available databases using motion simulation, and we successfully tested them on an independent test set with synthetic and real motion. We were able to generalise our pre-trained model to clinical images thanks to the motion labelling of 4045 MRIs. Our deep learning classifier was almost as reliable as manual rating for the detection of severe motion artefacts. Our work demonstrated the usefulness of synthetic motion for improving the detection of motion artefacts in MRI, as well as the crucial need for transfer learning to generalise models trained on research data to routine clinical data.

Results

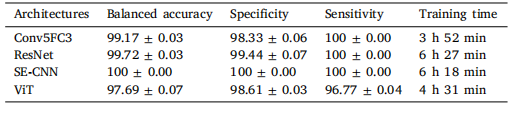

4.1. Validation on research data

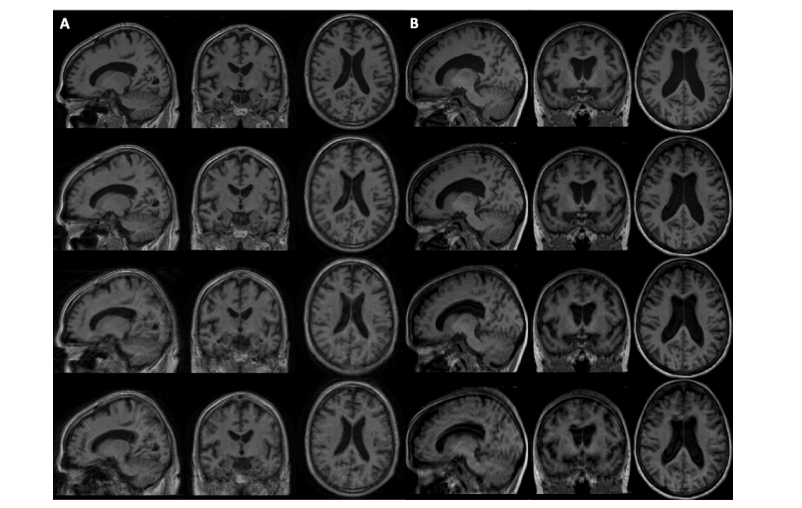

The ability of deep learning models to detect motion was first assessed using images from the research dataset corrupted with synthetic motion. Fig. 2 displays three images corrupted with the image-based and the k-space-based approaches using different translation and rotation ranges, alongside the original motion-free image.

We started by evaluating the performance of our Conv5FC3 model trained on synthetic motion when applied to our synthetic independent test set, which was corrupted with different motion severity degrees (rotation: [2°, 8°]; translation: [2 mm, 8 mm]). We studied the influence of the translation and rotation ranges through several experiments with different motion severity degrees. We first trained a model on synthetic severe motion by applying large rotation and translation ranges ([6°, 8°]; [6 mm, 8 mm]). The balanced accuracy (BA) on our independent test set was excellent with both motion simulation techniques (> 98%). We also obtained very good results for smaller ranges of rotation and translation, as reported in Table 4. A ROC analysis was performed for these experiments on the research dataset, and the AUC values consistently exceeded 0.98, indicating an excellent ability to discriminate between motion-corrupted and motion-free images regardless of the rotation and translation parameters used (Fig. S5).

Then, we evaluated the ability of these models to detect real motion. As mentioned in Section 3.1.1, we defined a test set according to the IPMOTION score. Our models were able to detect motion in these images perfectly. No notable difference in performance was observed between the two simulation techniques (Table 4).

We also compared the performance of different architectures on the test set corrupted with synthetic motion. In Table 5, we report the results of the four architectures trained using k-space-based motion simulation with the following parameters: rotation: [2°, 4°]; translation: [2 mm, 4 mm], as these led to the best results on synthetic and real motion for the Conv5FC3 architecture. The ResNet and the SE-CNN performed comparably to the Conv5FC3, with a BA > 99%, whereas the ViT BA was lower (BA = 97.69%). Thus, more complex networks did not provide any notable improvement. The same conclusion was reached for the image-based motion simulation technique (Table S2).
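
The AUC values quoted above have a simple probabilistic reading. The AUC is the probability that a randomly chosen motion-corrupted image receives a higher classifier score than a randomly chosen motion-free one, with ties counting one half. This is the normalised Mann-Whitney U statistic. The pure-Python sketch below computes it directly. The function name `roc_auc` is ours, and the quadratic double loop is chosen for clarity rather than speed.

```python
def roc_auc(labels, scores):
    """AUC as P(score of a random positive > score of a random negative).

    labels: 1 = motion, 0 = no motion; scores: classifier outputs (higher = more motion).
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            # A correctly ordered pair counts 1, a tie counts 1/2, a reversed pair 0.
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))
```

For example, labels `[0, 0, 1, 1]` with scores `[0.1, 0.4, 0.35, 0.8]` give 3 correctly ordered pairs out of 4, so the AUC is 0.75. An AUC above 0.98 therefore means that almost every corrupted/clean pair is ranked correctly.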

Figure

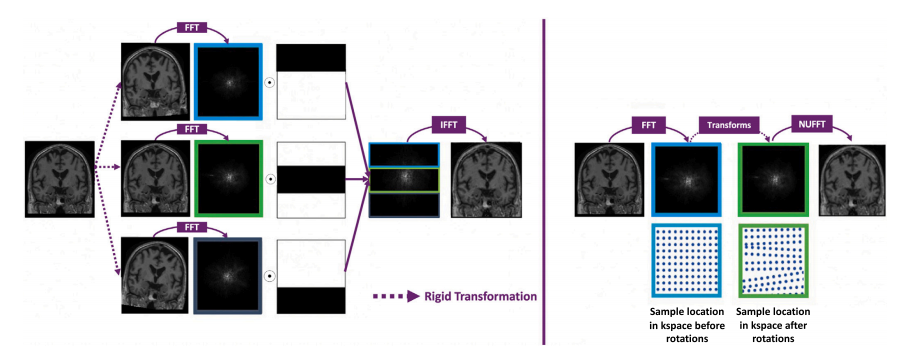

Fig. 1. Left: Image-based motion simulation. (1) N_t rigid transformations of the motion-free image are applied (here N_t = 2), (2) fast Fourier transform (FFT) of the original and N_t transformed images, (3) concatenation of the N_t + 1 blocks to create a new k-space, (4) inverse FFT (IFFT) to obtain the motion-corrupted image. Right: k-space-based motion simulation. (1) FFT of the motion-free image, (2) transformation (rotation + translation) for each point of the time course, (3) non-uniform FFT (NUFFT) to reconstruct the corrupted image (needed because the rotation in k-space produces non-uniform sample spacing).
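
Steps (1) to (4) of the image-based approach can be sketched on a single 2D slice with NumPy. This is a hedged illustration, not the authors' code. It assumes linear (top-to-bottom) phase encoding, so that each contiguous block of centred k-space rows belongs to one head position. The rigid transforms use nearest-neighbour interpolation with zero filling, and translations are expressed in pixels. The helper names `rigid_2d`, `image_based_motion` and `sample_params` are hypothetical.

```python
import numpy as np


def rigid_2d(img: np.ndarray, angle_deg: float, dy: float, dx: float) -> np.ndarray:
    """Rotate about the slice centre, then translate; nearest-neighbour, zero-filled."""
    ny, nx = img.shape
    yy, xx = np.meshgrid(np.arange(ny), np.arange(nx), indexing="ij")
    cy, cx = (ny - 1) / 2, (nx - 1) / 2
    a = np.deg2rad(angle_deg)
    # Inverse mapping: for every output pixel, find the source pixel it came from.
    y0, x0 = yy - cy - dy, xx - cx - dx
    ys = np.rint(np.cos(a) * y0 + np.sin(a) * x0 + cy).astype(int)
    xs = np.rint(-np.sin(a) * y0 + np.cos(a) * x0 + cx).astype(int)
    valid = (ys >= 0) & (ys < ny) & (xs >= 0) & (xs < nx)
    out = np.zeros_like(img)
    out[valid] = img[ys[valid], xs[valid]]
    return out


def image_based_motion(image: np.ndarray, params: list) -> np.ndarray:
    """Steps (1)-(4) of Fig. 1 (left); params holds one (angle_deg, dy, dx) per transform."""
    n_blocks = len(params) + 1  # N_t transformed images plus the original
    # (1) rigid transforms, (2) FFT of the original and transformed images (centred k-space).
    images = [image] + [rigid_2d(image, *p) for p in params]
    kspaces = [np.fft.fftshift(np.fft.fft2(im)) for im in images]
    # (3) assumed linear phase encoding: row block b of the new k-space comes from image b.
    bounds = np.linspace(0, image.shape[0], n_blocks + 1).astype(int)
    mixed = np.empty_like(kspaces[0])
    for b in range(n_blocks):
        mixed[bounds[b]:bounds[b + 1]] = kspaces[b][bounds[b]:bounds[b + 1]]
    # (4) inverse FFT back to image space; the magnitude is the corrupted image.
    return np.abs(np.fft.ifft2(np.fft.ifftshift(mixed)))


def sample_params(n_t: int, rot: tuple, trans: tuple, rng: np.random.Generator) -> list:
    """Draw N_t transforms with |angle| in rot (degrees) and |dy|, |dx| in trans (pixels)."""
    params = []
    for _ in range(n_t):
        mags = np.array([rng.uniform(*rot), rng.uniform(*trans), rng.uniform(*trans)])
        signs = rng.choice([-1.0, 1.0], size=3)
        params.append(tuple(float(v) for v in mags * signs))
    return params


rng = np.random.default_rng(0)
slice_ = rng.random((64, 64))  # stand-in for a motion-free MR slice
# N_t = 2 as in Fig. 1, with the [2, 8] ranges used for the synthetic test set.
corrupted = image_based_motion(slice_, sample_params(2, (2.0, 8.0), (2.0, 8.0), rng))
```

Unlike the k-space approach, each motion event here is a whole block of k-space, so the head position changes only N_t times during the simulated acquisition.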

Fig. 2. Example of motion simulation on brain MRI using the k-space-based (A) and the image-based (B) approaches with different translation and rotation ranges. From top to bottom: motion-free MRI, then MRIs corrupted with a rotation of 3°, 5° and 7° and a translation of 3 mm, 5 mm and 7 mm.

Fig. 3. Receiver operating characteristic (ROC) curves for the detection of severe (Mov01vs2) and moderate (Mov0vs1) motion in 3D T1w MRIs.

Table

Table 1. Distribution of sex and age in the research (ADNI, MSSEG and MNI BITE) and clinical (AP-HP) datasets.

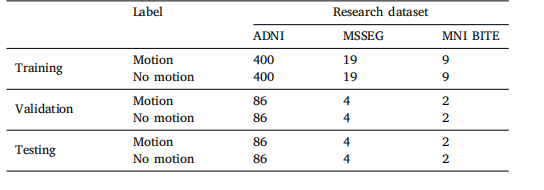

Table 2. Distribution of the training, validation and test sets for the synthetic motion detection task, using the research dataset comprising images from the ADNI, MSSEG and MNI BITE databases.

Table 3. Distribution of the training, validation and test sets for each of the two fine-tuning tasks on the AP-HP CDW: the detection of severe (Mov01vs2) and moderate (Mov0vs1) motion.
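
The two task names suggest that each CDW image carries a three-level motion grade, from which two binary problems are derived. The mapping below is a hypothetical sketch that makes this reading explicit. The 0/1/2 grading scale is an assumption inferred from the names. So is the choice to leave grade-2 (severe) images out of the Mov0vs1 task. Neither is stated in this excerpt.

```python
from typing import Optional


def task_label(grade: int, task: str) -> Optional[int]:
    """Map an assumed motion grade (0 = none, 1 = moderate, 2 = severe) to a binary label.

    Returns None when the image is assumed not to take part in the task.
    """
    if grade not in (0, 1, 2):
        raise ValueError(f"unexpected motion grade: {grade}")
    if task == "Mov01vs2":  # severe motion vs. everything else
        return 1 if grade == 2 else 0
    if task == "Mov0vs1":  # no motion vs. moderate motion; severe images left out (assumption)
        return None if grade == 2 else grade
    raise ValueError(f"unknown task: {task}")


grades = [0, 1, 2, 1, 0]
severe = [task_label(g, "Mov01vs2") for g in grades]   # [0, 0, 1, 0, 0]
moderate = [task_label(g, "Mov0vs1") for g in grades]  # [0, 1, None, 1, 0]
```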

Table 4. Results for the detection of synthetic and real motion in T1w brain MRIs from the research dataset. For the validation on synthetic motion, we report the mean and the empirical standard deviation across the five folds for the balanced accuracy, specificity and sensitivity. For the detection of real motion, only the accuracy obtained by the best model of the 5-fold CV is reported, as our independent test set contained only images with motion. Results are detailed for both simulation approaches: image-based and k-space-based.
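
The metrics in Table 4 can be made concrete with a short pure-Python sketch. It assumes label 1 means motion and 0 means no motion. Balanced accuracy is the mean of sensitivity and specificity, and it is summarised over folds by its mean and standard deviation. Whether "empirical standard deviation" uses the n - 1 (sample) or n (population) denominator is not stated; the sketch assumes `statistics.stdev`, i.e. n - 1. The sketch also shows why only accuracy is reported for the real-motion set: with motion images only, specificity, and hence balanced accuracy, is undefined. Function names are hypothetical.

```python
from statistics import mean, stdev


def balanced_accuracy(y_true, y_pred):
    """Return (BA, sensitivity, specificity) with label 1 = motion, 0 = no motion."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    if tp + fn == 0 or tn + fp == 0:
        # With only motion images (as in the real-motion test set), specificity is undefined.
        raise ValueError("balanced accuracy needs both classes in y_true")
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return (sensitivity + specificity) / 2, sensitivity, specificity


def summarize_folds(folds):
    """Mean and sample standard deviation of BA over (y_true, y_pred) pairs, one per fold."""
    scores = [balanced_accuracy(t, p)[0] for t, p in folds]
    return mean(scores), stdev(scores)
```

For instance, `balanced_accuracy([1, 1, 1, 0], [1, 1, 0, 0])` gives sensitivity 2/3, specificity 1 and a BA of 5/6. Unlike plain accuracy, BA is not inflated when one class dominates the test set.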

Table 5. Results of four different deep learning classifiers (Conv5FC3, ResNet, SE-CNN and ViT) trained and tested on MRIs from the research dataset corrupted with k-space-based motion simulation.

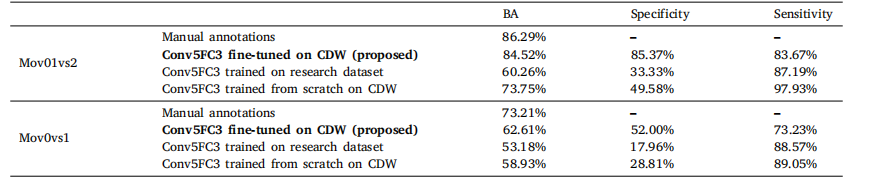

Table 6. Detection of motion artefacts in brain T1w MR images of the CDW. For both the detection of severe motion (Mov01vs2) and mild motion (Mov0vs1), we report: the agreement between human raters and the consensus (manual annotations); results of the proposed approach (pre-training on image-based synthetic motion from research data and fine-tuning on the CDW); results when training on image-based synthetic motion from research datasets without fine-tuning; and results when training from scratch on the CDW.
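
Table 6 contrasts fine-tuning a pre-trained model with training from scratch on the CDW. In the simplest form of fine-tuning, the two differ only in the weights optimisation starts from. The toy sketch below makes this concrete. It is deliberately not the authors' method: a hypothetical NumPy logistic-regression classifier on made-up features stands in for the CNN. A large, cleanly labelled "research" set stands in for synthetic motion, and a small, distribution-shifted "clinical" set stands in for the CDW. All data, dimensions and hyper-parameters are illustrative assumptions.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def log_loss(X: np.ndarray, y: np.ndarray, w: np.ndarray) -> float:
    """Mean logistic loss, computed stably with logaddexp."""
    z = X @ w
    return float(np.mean(y * np.logaddexp(0.0, -z) + (1 - y) * np.logaddexp(0.0, z)))


def train(X, y, w_init=None, lr=0.1, epochs=200):
    """Full-batch gradient descent on the logistic loss.

    w_init=None trains from scratch (zero weights); passing pre-trained weights
    fine-tunes them on the new data instead.
    """
    w = np.zeros(X.shape[1]) if w_init is None else w_init.copy()
    for _ in range(epochs):
        w -= lr * X.T @ (sigmoid(X @ w) - y) / len(y)
    return w


rng = np.random.default_rng(0)
w_true = np.array([1.5, -2.0, 0.5])
# Large "research" set and a small, shifted "clinical" set (both hypothetical).
X_src = rng.normal(size=(2000, 3))
y_src = (X_src @ w_true > 0).astype(float)
X_tgt = rng.normal(loc=0.5, size=(40, 3))
y_tgt = (X_tgt @ (w_true + np.array([0.3, 0.0, -0.3])) > 0).astype(float)

w_pre = train(X_src, y_src)                           # step 1: pre-training
w_ft = train(X_tgt, y_tgt, w_init=w_pre, epochs=20)   # step 2: fine-tuning
w_scratch = train(X_tgt, y_tgt, epochs=20)            # baseline: from scratch, same budget
```

Here the pre-trained weights only change the starting point of optimisation on the small target set. With CNNs one may additionally freeze early layers or lower the learning rate; this excerpt does not say which of these options the authors used.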

Table 7. Comparative study between our proposed method and state-of-the-art (SOTA) motion artefact detection methods (for the task Mov01vs2).
