Background (feel free to skip)

To get YOLOv8 running on arm64, I tried a great many code bases, and most of them were far too strict about library versions.

For example, one of them depends on ONNX Runtime (https://github.com/UNeedCryDear/yolov8-opencv-onnxruntime-cpp).

Trying it out produced the error: error: 'OrtSessionOptionsAppendExecutionProvider_CUDA' was not declared in this scope. Looking into it, the code needs not just ONNX Runtime but the GPU build of ONNX Runtime,

because this function only exists in the GPU build: OrtStatus* status = OrtSessionOptionsAppendExecutionProvider_CUDA(_OrtSessionOptions, cudaID);

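On the official ONNX Runtime download page (https://github.com/microsoft/onnxruntime/releases/), only one release package was usable on my platform, and it is not the GPU build. According to forum posts I found, the ONNX Runtime project does not provide prebuilt NVIDIA GPU libraries for this platform, so you have to compile them yourself.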

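So I tried compiling it myself and ran into all kinds of version mismatches: first the OpenCV version was too old, then a string of other complaints. I upgraded everything as requested, until finally it wanted a newer gcc as well. At that point I had to give up, because the base environment on this hardware cannot be changed, so I abandoned the ONNX Runtime based YOLOv8 code above. (The lesson: for large libraries like this, download the official prebuilt binaries whenever possible. Building them yourself is a real pain. Unless there is truly no alternative, I suggest switching to different code instead, because version matching and build problems are the hardest to solve.)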

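Fortunately, I found a lightweight C++ YOLOv8 implementation that runs on NVIDIA arm64 hardware. It is clean and easy to use, pulls in no miscellaneous dependencies, and builds with just the libraries that ship with JetPack by default. It was not easy to find. The complete code follows.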

-

jetpack版本

-

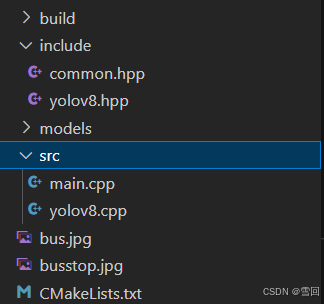

File structure

- main.cpp

      //
      // Created by triple-Mu on 24-1-2023.
      // Modified by Q-engineering on 6-3-2024
      //

      #include "chrono"
      #include "opencv2/opencv.hpp"
      #include "yolov8.hpp"

      using namespace std;
      using namespace cv;

      //#define VIDEO

      cv::Size im_size(640, 640);
      const int num_labels = 80;
      const int topk = 100;
      const float score_thres = 0.25f;
      const float iou_thres = 0.65f;

      int main(int argc, char** argv)
      {
          float f;
          float FPS[16];
          int i, Fcnt=0;
          cv::Mat image;
          std::chrono::steady_clock::time_point Tbegin, Tend;

          if (argc < 3) {
              fprintf(stderr,"Usage: ./YoloV8_RT [model_trt.engine] [image or video path] \n");
              return -1;
          }
          const string engine_file_path = argv[1];
          const string imagepath = argv[2];

          for(i=0;i<16;i++) FPS[i]=0.0;

          cout << "Set CUDA...\n" << endl;

          //wjp
          // cudaSetDevice(0);
          cudaStream_t(0);

          cout << "Loading TensorRT model " << engine_file_path << endl;
          cout << "\nWait a second...." << std::flush;
          auto yolov8 = new YOLOv8(engine_file_path);

          cout << "\rLoading the pipe... " << string(10, ' ')<< "\n\r" ;
          cout << endl;
          yolov8->MakePipe(true);

      #ifdef VIDEO
          VideoCapture cap(imagepath);
          if (!cap.isOpened()) {
              cerr << "ERROR: Unable to open the stream " << imagepath << endl;
              return 0;
          }
      #endif // VIDEO

          while(1){
      #ifdef VIDEO
              cap >> image;
              if (image.empty()) {
                  cerr << "ERROR: Unable to grab from the camera" << endl;
                  break;
              }
      #else
              image = cv::imread(imagepath);
              // imread returns an empty Mat when the file cannot be read
              if (image.empty()) {
                  cerr << "ERROR: Unable to read image " << imagepath << endl;
                  break;
              }
      #endif
              yolov8->CopyFromMat(image, im_size);

              std::vector<Object> objs;

              Tbegin = std::chrono::steady_clock::now();
              yolov8->Infer();
              Tend = std::chrono::steady_clock::now();

              yolov8->PostProcess(objs, score_thres, iou_thres, topk, num_labels);
              yolov8->DrawObjects(image, objs);

              //calculate frame rate
              f = std::chrono::duration_cast <std::chrono::milliseconds> (Tend - Tbegin).count();
              cout << "Infer time " << f << endl;
              if(f>0.0) FPS[((Fcnt++)&0x0F)]=1000.0/f;
              for(f=0.0, i=0;i<16;i++){ f+=FPS[i]; }
              putText(image, cv::format("FPS %0.2f", f/16),cv::Point(10,20),cv::FONT_HERSHEY_SIMPLEX,0.6, cv::Scalar(0, 0, 255));

              //show output
              // imshow("Jetson Orin Nano- 8 Mb RAM", image);
              // char esc = cv::waitKey(1);
              // if(esc == 27) break;
              imwrite("./out.jpg", image);
              // exit after writing the first result; the cleanup below is not reached
              return 0;
          }
          cv::destroyAllWindows();
          delete yolov8;
          return 0;
      }

- yolov8.cpp

      //
      // Created by triple-Mu on 24-1-2023.
      // Modified by Q-engineering on 6-3-2024
      //

      #include "yolov8.hpp"
      #include <cuda_runtime_api.h>
      #include <cuda.h>

      //----------------------------------------------------------------------------------------
      //using namespace det;
      //----------------------------------------------------------------------------------------
      const char* class_names[] = {
          "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",
          "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",
          "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
          "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",
          "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",
          "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",
          "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",
          "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",
          "hair drier", "toothbrush"
      };
      //----------------------------------------------------------------------------------------
      YOLOv8::YOLOv8(const std::string& engine_file_path)
      {
          std::ifstream file(engine_file_path, std::ios::binary);
          assert(file.good());
          file.seekg(0, std::ios::end);
          auto size = file.tellg();
          file.seekg(0, std::ios::beg);
          char* trtModelStream = new char[size];
          assert(trtModelStream);
          file.read(trtModelStream, size);
          file.close();
          initLibNvInferPlugins(&this->gLogger, "");
          this->runtime = nvinfer1::createInferRuntime(this->gLogger);
          assert(this->runtime != nullptr);

          this->engine = this->runtime->deserializeCudaEngine(trtModelStream, size);
          assert(this->engine != nullptr);
          delete[] trtModelStream;
          this->context = this->engine->createExecutionContext();

          assert(this->context != nullptr);
          cudaStreamCreate(&this->stream);
          this->num_bindings = this->engine->getNbBindings();

          for (int i = 0; i < this->num_bindings; ++i) {
              Binding binding;
              nvinfer1::Dims dims;
              nvinfer1::DataType dtype = this->engine->getBindingDataType(i);
              std::string name = this->engine->getBindingName(i);
              binding.name = name;
              binding.dsize = type_to_size(dtype);

              bool IsInput = engine->bindingIsInput(i);
              if (IsInput) {
                  this->num_inputs += 1;
                  dims = this->engine->getProfileDimensions(i, 0, nvinfer1::OptProfileSelector::kMAX);
                  binding.size = get_size_by_dims(dims);
                  binding.dims = dims;
                  this->input_bindings.push_back(binding);
                  // set max opt shape
                  this->context->setBindingDimensions(i, dims);
              }
              else {
                  dims = this->context->getBindingDimensions(i);
                  binding.size = get_size_by_dims(dims);
                  binding.dims = dims;
                  this->output_bindings.push_back(binding);
                  this->num_outputs += 1;
              }
          }
      }
      //----------------------------------------------------------------------------------------
      YOLOv8::~YOLOv8()
      {
          this->context->destroy();
          this->engine->destroy();
          this->runtime->destroy();
          cudaStreamDestroy(this->stream);
          for (auto& ptr : this->device_ptrs) {
              CHECK(cudaFree(ptr));
          }

          for (auto& ptr : this->host_ptrs) {
              CHECK(cudaFreeHost(ptr));
          }
      }
      //----------------------------------------------------------------------------------------
      void YOLOv8::MakePipe(bool warmup)
      {
      #ifndef CUDART_VERSION
      #error CUDART_VERSION Undefined!
      #endif

          for (auto& bindings : this->input_bindings) {
              void* d_ptr;
      #if(CUDART_VERSION < 11000)
              CHECK(cudaMalloc(&d_ptr, bindings.size * bindings.dsize));
      #else
              CHECK(cudaMallocAsync(&d_ptr, bindings.size * bindings.dsize, this->stream));
      #endif
              this->device_ptrs.push_back(d_ptr);
          }

          for (auto& bindings : this->output_bindings) {
              void * d_ptr, *h_ptr;
              size_t size = bindings.size * bindings.dsize;
      #if(CUDART_VERSION < 11000)
              CHECK(cudaMalloc(&d_ptr, bindings.size * bindings.dsize));
      #else
              CHECK(cudaMallocAsync(&d_ptr, bindings.size * bindings.dsize, this->stream));
      #endif
              CHECK(cudaHostAlloc(&h_ptr, size, 0));
              this->device_ptrs.push_back(d_ptr);
              this->host_ptrs.push_back(h_ptr);
          }

          if (warmup) {
              for (int i = 0; i < 10; i++) {
                  for (auto& bindings : this->input_bindings) {
                      size_t size = bindings.size * bindings.dsize;
                      void* h_ptr = malloc(size);
                      memset(h_ptr, 0, size);
                      CHECK(cudaMemcpyAsync(this->device_ptrs[0], h_ptr, size, cudaMemcpyHostToDevice, this->stream));
                      free(h_ptr);
                  }
                  this->Infer();
              }
          }
      }
      //----------------------------------------------------------------------------------------
      void YOLOv8::Letterbox(const cv::Mat& image, cv::Mat& out, cv::Size& size)
      {
          const float inp_h = size.height;
          const float inp_w = size.width;
          float height = image.rows;
          float width = image.cols;

          float r = std::min(inp_h / height, inp_w / width);
          int padw = std::round(width * r);
          int padh = std::round(height * r);

          cv::Mat tmp;
          if ((int)width != padw || (int)height != padh) {
              cv::resize(image, tmp, cv::Size(padw, padh));
          }
          else {
              tmp = image.clone();
          }

          float dw = inp_w - padw;
          float dh = inp_h - padh;

          dw /= 2.0f;
          dh /= 2.0f;
          int top = int(std::round(dh - 0.1f));
          int bottom = int(std::round(dh + 0.1f));
          int left = int(std::round(dw - 0.1f));
          int right = int(std::round(dw + 0.1f));

          cv::copyMakeBorder(tmp, tmp, top, bottom, left, right, cv::BORDER_CONSTANT, {114, 114, 114});

          cv::dnn::blobFromImage(tmp, out, 1 / 255.f, cv::Size(), cv::Scalar(0, 0, 0), true, false, CV_32F);
          this->pparam.ratio = 1 / r;
          this->pparam.dw = dw;
          this->pparam.dh = dh;
          this->pparam.height = height;
          this->pparam.width = width;
      }
      //----------------------------------------------------------------------------------------
      void YOLOv8::CopyFromMat(const cv::Mat& image)
      {
          cv::Mat nchw;
          auto& in_binding = this->input_bindings[0];
          auto width = in_binding.dims.d[3];
          auto height = in_binding.dims.d[2];
          cv::Size size{width, height};
          this->Letterbox(image, nchw, size);

          this->context->setBindingDimensions(0, nvinfer1::Dims{4, {1, 3, height, width}});

          CHECK(cudaMemcpyAsync(this->device_ptrs[0], nchw.ptr<float>(), nchw.total() * nchw.elemSize(), cudaMemcpyHostToDevice, this->stream));
      }
      //----------------------------------------------------------------------------------------
      void YOLOv8::CopyFromMat(const cv::Mat& image, cv::Size& size)
      {
          cv::Mat nchw;
          this->Letterbox(image, nchw, size);
          this->context->setBindingDimensions(0, nvinfer1::Dims{4, {1, 3, size.height, size.width}});
          CHECK(cudaMemcpyAsync(this->device_ptrs[0], nchw.ptr<float>(), nchw.total() * nchw.elemSize(), cudaMemcpyHostToDevice, this->stream));
      }
      //----------------------------------------------------------------------------------------
      void YOLOv8::Infer()
      {
          this->context->enqueueV2(this->device_ptrs.data(), this->stream, nullptr);
          for (int i = 0; i < this->num_outputs; i++) {
              size_t osize = this->output_bindings[i].size * this->output_bindings[i].dsize;
              CHECK(cudaMemcpyAsync(this->host_ptrs[i], this->device_ptrs[i + this->num_inputs], osize, cudaMemcpyDeviceToHost, this->stream));
          }
          cudaStreamSynchronize(this->stream);
      }
      //----------------------------------------------------------------------------------------
      void YOLOv8::PostProcess(std::vector<Object>& objs, float score_thres, float iou_thres, int topk, int num_labels)
      {
          objs.clear();
          auto num_channels = this->output_bindings[0].dims.d[1];
          auto num_anchors = this->output_bindings[0].dims.d[2];

          auto& dw = this->pparam.dw;
          auto& dh = this->pparam.dh;
          auto& width = this->pparam.width;
          auto& height = this->pparam.height;
          auto& ratio = this->pparam.ratio;

          std::vector<cv::Rect> bboxes;
          std::vector<float> scores;
          std::vector<int> labels;
          std::vector<int> indices;

          cv::Mat output = cv::Mat(num_channels, num_anchors, CV_32F, static_cast<float*>(this->host_ptrs[0]));
          output = output.t();
          for (int i = 0; i < num_anchors; i++) {
              auto row_ptr = output.row(i).ptr<float>();
              auto bboxes_ptr = row_ptr;
              auto scores_ptr = row_ptr + 4;
              auto max_s_ptr = std::max_element(scores_ptr, scores_ptr + num_labels);
              float score = *max_s_ptr;
              if (score > score_thres) {
                  float x = *bboxes_ptr++ - dw;
                  float y = *bboxes_ptr++ - dh;
                  float w = *bboxes_ptr++;
                  float h = *bboxes_ptr;

                  float x0 = clamp((x - 0.5f * w) * ratio, 0.f, width);
                  float y0 = clamp((y - 0.5f * h) * ratio, 0.f, height);
                  float x1 = clamp((x + 0.5f * w) * ratio, 0.f, width);
                  float y1 = clamp((y + 0.5f * h) * ratio, 0.f, height);

                  int label = max_s_ptr - scores_ptr;
                  cv::Rect_<float> bbox;
                  bbox.x = x0;
                  bbox.y = y0;
                  bbox.width = x1 - x0;
                  bbox.height = y1 - y0;

                  bboxes.push_back(bbox);
                  labels.push_back(label);
                  scores.push_back(score);
              }
          }

      #ifdef BATCHED_NMS
          cv::dnn::NMSBoxesBatched(bboxes, scores, labels, score_thres, iou_thres, indices);
      #else
          cv::dnn::NMSBoxes(bboxes, scores, score_thres, iou_thres, indices);
      #endif

          int cnt = 0;
          for (auto& i : indices) {
              if (cnt >= topk) {
                  break;
              }
              Object obj;
              obj.rect = bboxes[i];
              obj.prob = scores[i];
              obj.label = labels[i];
              objs.push_back(obj);
              cnt += 1;
          }
      }
      //----------------------------------------------------------------------------------------
      void YOLOv8::DrawObjects(cv::Mat& bgr, const std::vector<Object>& objs)
      {
          char text[256];

          for (auto& obj : objs) {
              cv::rectangle(bgr, obj.rect, cv::Scalar(255, 0, 0));

              sprintf(text, "%s %.1f%%", class_names[obj.label], obj.prob * 100);

              int baseLine = 0;
              cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

              int x = (int)obj.rect.x;
              int y = (int)obj.rect.y - label_size.height - baseLine;

              if (y < 0) y = 0;
              if (y > bgr.rows) y = bgr.rows;
              if (x + label_size.width > bgr.cols) x = bgr.cols - label_size.width;

              cv::rectangle(bgr, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)), cv::Scalar(255, 255, 255), -1);

              cv::putText(bgr, text, cv::Point(x, y + label_size.height), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));
          }
      }

- common.hpp

      //
      // Created by triple-Mu on 24-1-2023.
      // Modified by Q-engineering on 6-3-2024
      //

      #ifndef DETECT_NORMAL_COMMON_HPP
      #define DETECT_NORMAL_COMMON_HPP
      #include "NvInfer.h"
      #include "opencv2/opencv.hpp"

      #define CHECK(call) \
          do { \
              const cudaError_t error_code = call; \
              if (error_code != cudaSuccess) { \
                  printf("CUDA Error:\n"); \
                  printf(" File: %s\n", __FILE__); \
                  printf(" Line: %d\n", __LINE__); \
                  printf(" Error code: %d\n", error_code); \
                  printf(" Error text: %s\n", cudaGetErrorString(error_code)); \
                  exit(1); \
              } \
          } while (0)

      class Logger: public nvinfer1::ILogger {
      public:
          nvinfer1::ILogger::Severity reportableSeverity;

          explicit Logger(nvinfer1::ILogger::Severity severity = nvinfer1::ILogger::Severity::kINFO):
              reportableSeverity(severity)
          {
          }

          void log(nvinfer1::ILogger::Severity severity, const char* msg) noexcept override
          {
              if (severity > reportableSeverity) {
                  return;
              }
              switch (severity) {
                  case nvinfer1::ILogger::Severity::kINTERNAL_ERROR:
                      std::cerr << "INTERNAL_ERROR: ";
                      break;
                  case nvinfer1::ILogger::Severity::kERROR:
                      std::cerr << "ERROR: ";
                      break;
                  case nvinfer1::ILogger::Severity::kWARNING:
                      std::cerr << "WARNING: ";
                      break;
                  case nvinfer1::ILogger::Severity::kINFO:
                      std::cerr << "INFO: ";
                      break;
                  default:
                      std::cerr << "VERBOSE: ";
                      break;
              }
              std::cerr << msg << std::endl;
          }
      };

      inline int get_size_by_dims(const nvinfer1::Dims& dims)
      {
          int size = 1;
          for (int i = 0; i < dims.nbDims; i++) {
              size *= dims.d[i];
          }
          return size;
      }

      inline int type_to_size(const nvinfer1::DataType& dataType)
      {
          switch (dataType) {
              case nvinfer1::DataType::kFLOAT:
                  return 4;
              case nvinfer1::DataType::kHALF:
                  return 2;
              case nvinfer1::DataType::kINT32:
                  return 4;
              case nvinfer1::DataType::kINT8:
                  return 1;
              case nvinfer1::DataType::kBOOL:
                  return 1;
              default:
                  return 4;
          }
      }

      inline static float clamp(float val, float min, float max)
      {
          return val > min ? (val < max ? val : max) : min;
      }

      namespace det {
      struct Binding {
          size_t size = 1;
          size_t dsize = 1;
          nvinfer1::Dims dims;
          std::string name;
      };

      struct Object {
          cv::Rect_<float> rect;
          int label = 0;
          float prob = 0.0;
      };

      struct PreParam {
          float ratio = 1.0f;
          float dw = 0.0f;
          float dh = 0.0f;
          float height = 0;
          float width = 0;
      };
      } // namespace det
      #endif // DETECT_NORMAL_COMMON_HPP

- yolov8.hpp

      //
      // Created by triple-Mu on 24-1-2023.
      // Modified by Q-engineering on 6-3-2024
      //
      #ifndef DETECT_NORMAL_YOLOV8_HPP
      #define DETECT_NORMAL_YOLOV8_HPP
      #include "NvInferPlugin.h"
      #include "common.hpp"
      #include "fstream"

      using namespace det;

      class YOLOv8 {
      private:
          nvinfer1::ICudaEngine* engine = nullptr;
          nvinfer1::IRuntime* runtime = nullptr;
          nvinfer1::IExecutionContext* context = nullptr;
          cudaStream_t stream = nullptr;
          Logger gLogger{nvinfer1::ILogger::Severity::kERROR};

      public:
          int num_bindings;
          int num_inputs = 0;
          int num_outputs = 0;
          std::vector<Binding> input_bindings;
          std::vector<Binding> output_bindings;
          std::vector<void*> host_ptrs;
          std::vector<void*> device_ptrs;

          PreParam pparam;

      public:
          explicit YOLOv8(const std::string& engine_file_path);
          ~YOLOv8();

          void MakePipe(bool warmup = true);
          void CopyFromMat(const cv::Mat& image);
          void CopyFromMat(const cv::Mat& image, cv::Size& size);
          void Letterbox(const cv::Mat& image, cv::Mat& out, cv::Size& size);
          void Infer();
          void PostProcess(std::vector<Object>& objs, float score_thres, float iou_thres, int topk, int num_labels = 80);
          void DrawObjects(cv::Mat& bgr, const std::vector<Object>& objs);
      };
      #endif // DETECT_NORMAL_YOLOV8_HPP

- CMakeLists.txt

      cmake_minimum_required(VERSION 3.1)

      set(CMAKE_CUDA_ARCHITECTURES 60 61 62 70 72 75 86 89 90)
      set(CMAKE_CUDA_COMPILER /usr/local/cuda/bin/nvcc)

      project(YoloV8rt LANGUAGES CXX CUDA)

      set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++14 -O3")
      set(CMAKE_CXX_STANDARD 14)
      set(CMAKE_BUILD_TYPE Release)
      option(CUDA_USE_STATIC_CUDA_RUNTIME OFF)

      # CUDA
      include_directories(/usr/local/cuda-10.2/targets/aarch64-linux/include)
      link_directories(/usr/local/cuda-10.2/targets/aarch64-linux/lib)
      # find_package(CUDA REQUIRED)
      # message(STATUS "CUDA Libs: \n${CUDA_LIBRARIES}\n")
      # get_filename_component(CUDA_LIB_DIR ${CUDA_LIBRARIES} DIRECTORY)
      # message(STATUS "CUDA Headers: \n${CUDA_INCLUDE_DIRS}\n")

      # OpenCV
      find_package(OpenCV REQUIRED)

      # TensorRT
      set(TensorRT_INCLUDE_DIRS /usr/include /usr/include/aarch64-linux-gnu)
      set(TensorRT_LIBRARIES /usr/lib/aarch64-linux-gnu)

      message(STATUS "TensorRT Libs: \n\n${TensorRT_LIBRARIES}\n")
      message(STATUS "TensorRT Headers: \n${TensorRT_INCLUDE_DIRS}\n")

      list(APPEND INCLUDE_DIRS
              ${CUDA_INCLUDE_DIRS}
              ${OpenCV_INCLUDE_DIRS}
              ${TensorRT_INCLUDE_DIRS}
              include
              )

      list(APPEND ALL_LIBS
              ${CUDA_LIBRARIES}
              ${CUDA_LIB_DIR}
              ${OpenCV_LIBRARIES}
              ${TensorRT_LIBRARIES}
              )

      include_directories(${INCLUDE_DIRS})

      add_executable(${PROJECT_NAME}
              src/main.cpp
              src/yolov8.cpp
              include/yolov8.hpp
              include/common.hpp
              )

      target_link_libraries(${PROJECT_NAME} PUBLIC ${ALL_LIBS})
      target_link_libraries(${PROJECT_NAME} PRIVATE nvinfer nvinfer_plugin cudart ${OpenCV_LIBS})

      #place exe in parent folder
      set(EXECUTABLE_OUTPUT_PATH "./")

      if (${OpenCV_VERSION} VERSION_GREATER_EQUAL 4.7.0)
          message(STATUS "Build with -DBATCHED_NMS")
          add_definitions(-DBATCHED_NMS)
      endif ()

- Original project

https://github.com/Qengineering/YoloV8-TensorRT-Jetson_Nano